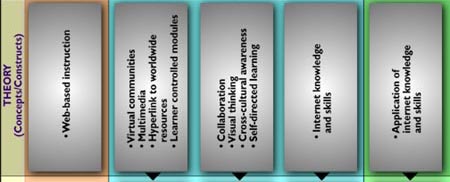

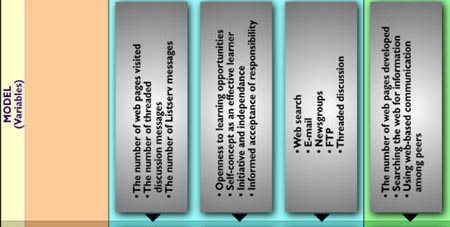

The input-process-output structural framework (see Figure 1) is a specification of how different input, intermediate, and output variables form causal relationships in a system. The structural framework has four levels of abstraction: paradigm, theory, model, and measurement.

The highest layer of the framework has the highest degree of abstraction, and each lower layer is less abstract; conversely, the degree of specification increases as one moves down the layers. A theory is an implementation of a paradigm, a model is a specification of a theory, and a measurement is the quantification or empirical representation of a model. Implementations of the structural framework may vary at the theory, model, and measurement levels. However, the emphasis on input-process-output is firmly embedded at the paradigm level.

The proposed structural framework endorses a paradigm, inspired by cognitive psychology, that emphasizes the input, process, and output of human learning. This paradigm challenges the input-output emphasis of the "black box" approach. Under a "black box" approach, an input, usually a treatment, is delivered to the intended audience. Afterwards, the output, usually an observable outcome, is evaluated, and the effectiveness of the treatment is inferred from it. This input-output approach leaves many important questions unanswered, such as "What are the properties of the instructional media?", "What effects result from these properties?", "How do the learners use the program?", and "How do the learners process the information?" The "black box" approach can be traced back to Behaviorism and classic learning theory, in which the learner or subject is treated as a black box. In Behaviorism, only stimuli (input) and responses (output) are emphasized for controlling behavior, and the underlying structure, mechanisms, and dynamics are either unknown or regarded as unimportant (Fontana, 1984).

With the increasing popularity of cognitive psychology, questions about human mental structures and processes, such as "How is knowledge represented?" and "How does an individual acquire new knowledge?", are being addressed (Kellogg, 1995). Because cognitive psychology is process-oriented, educational psychologists pay much attention to learning processes (Anderson, 1990; West, Farmer, & Wolff, 1991). In the context of Web-based instruction, questions about the relationships between instructional media and learning processes should therefore be raised, for example, "How does hyperlinking enhance the connectivity of human schemas?" and "How does asynchronous collaboration on the Internet improve intrinsic motivation?"

The second dimension is the input-process-output stream. As seen in Figure 1, this stream can be viewed as a horizontal process.

Different uses of those resources may contribute to different learning processes and outcomes. There are two ways to define variables and develop instruments for the above media properties. The first is to create another version of the treatment without these media properties and randomly assign users to this control group; in this fashion, group membership becomes a variable. The second is to treat the tools that carry specific media properties as numeric variables and track how frequently they are used. For example, the number of chat, threaded discussion, and ListServ messages could indicate the utilization of virtual communities, and the amount of time spent and the number of pages accessed within the WBI could reflect user engagement. Several Web traffic tracking technologies can be used to collect data for studying user patterns in WBI (Yu, Jannasch-Pennell, DiGangi, & Wasson, 1998).
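As a rough illustration of the two ways of defining variables described above, the following is a minimal sketch that assumes a hypothetical log format in which each record holds a user ID, the tool used, and the seconds attributed to that event. The names `LogEntry`, `assign_groups`, `usage_variables`, and the tool labels are illustrative assumptions, not taken from any particular tracking product or from Yu et al. The sketch randomly assigns users to a treatment or control group (a group-membership variable) and aggregates per-user frequency counts from the log (numeric usage variables).

```python
import random
from collections import Counter, defaultdict
from dataclasses import dataclass

# Hypothetical tool labels; a real WBI log would use its own event names.
COMMUNITY_TOOLS = ("chat", "threaded_discussion", "listserv")


@dataclass
class LogEntry:
    """One hypothetical log record: who used which tool, and for how long."""
    user_id: str
    tool: str        # e.g. "chat", "threaded_discussion", "listserv", "page_view"
    seconds: float   # time attributed to this event (assumed field)


def assign_groups(user_ids, seed=0):
    """Way 1: randomly split users into treatment (1) and control (0).

    The returned mapping is the group-membership variable. With an odd
    number of users, the control group receives the extra user.
    """
    rng = random.Random(seed)
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {uid: 1 if i < half else 0 for i, uid in enumerate(shuffled)}


def usage_variables(entries):
    """Way 2: turn tool usage into per-user numeric variables."""
    counts = defaultdict(Counter)
    seconds = defaultdict(float)
    for e in entries:
        counts[e.user_id][e.tool] += 1
        seconds[e.user_id] += e.seconds
    return {
        uid: {
            # Messages across community tools indicate virtual-community use.
            "community_messages": sum(c[t] for t in COMMUNITY_TOOLS),
            # Page views and time spent serve as engagement indicators.
            "pages_accessed": c["page_view"],
            "seconds_spent": seconds[uid],
        }
        for uid, c in counts.items()
    }


if __name__ == "__main__":
    log = [
        LogEntry("u1", "chat", 30.0),
        LogEntry("u1", "page_view", 120.0),
        LogEntry("u1", "page_view", 60.0),
        LogEntry("u2", "listserv", 45.0),
    ]
    print(assign_groups(["u1", "u2", "u3", "u4"], seed=42))
    print(usage_variables(log))
```

For this sample log, `usage_variables` reports one community message, two pages accessed, and 210 seconds for `u1`. Either kind of variable could then be related to process and outcome measures; which tools count as "community" tools is a design choice the evaluator would make for the local WBI.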

However, standardized instruments are not flawless. The variables said to be latent dimensions of a broader construct are usually extracted by factor analysis, and Kelley (1940) warned that variables resulting from factor analysis are not timeless, spaceless, populationless truth. Evaluators are therefore advised to use standardized inventories as a reference for developing instruments adapted to their local treatment and audience.

Fontana, D. (Ed.). (1984). Behaviorism and learning theory in education. Edinburgh: Academic Press.

Guglielmino, L. M. (1977). Development of the Self-directed Learning Readiness Scale. Unpublished doctoral dissertation, University of Georgia, Athens, GA.

Guglielmino, P. J., & Murdick, R. G. (1997). Self-directed learning: The quiet revolution in corporate training and development. Advanced Management Journal, 62, 10-18.

Kelley, T. L. (1940). Comment on Wilson and Worcester's note on factor analysis. Psychometrika, 5, 117-120.

Kellogg, R. T. (1995). Cognitive psychology. Thousand Oaks: Sage Publications.

Kubiszyn, T., & Borich, G. (1993). Educational testing and measurement: Classroom application and practice. New York: Harper Collins College Publishers.

Kuhn, T. S. (1962). The structure of scientific revolutions. Chicago: University of Chicago Press.

Svanum, S., Chen, S. H., & Bublitz, S. (1997). Internet-based instruction of the principle of base rate and prediction: A demonstration project. Behavior Research Methods, Instruments, & Computers, 29, 228-231.

Swigger, K. M., Brazile, R., Lopez, V., & Livingston, A. (1997). The virtual collaborative university. Computers & Education, 29, 55-61.

West, C. K., Farmer, J. A., & Wolff, P. M. (1991). Instructional design: Implications from cognitive sciences. Englewood Cliffs, NJ: Prentice Hall.

Yu, C. H., Jannasch-Pennell, A., DiGangi, S., & Wasson, B. (1999). Using on-line interactive statistics for evaluating Web-based instruction. Journal of Educational Media International, 35, 157-161.
